I first read about Lean Thinking in the book *The Machine That Changed the World*, based on MIT’s $5M, five-year study on the future of auto manufacturing. The concept of ‘lean’ embraces ideas like just-in-time delivery, elimination of waste in the system, continuous improvement, and the entire team working together to streamline the process. At the time, I was studying how lean thinking applies to the construction industry. The rage then, and it still holds true today, was “design/build,” an approach that shortens project timelines by breaking the work into stages and having the architects, engineers, and contractors collaborate iteratively. Instead of designing the entire building upfront, the architect starts handing off plans to the engineers, and working with them, much earlier so the contractors can get going quickly. Not only does construction start sooner, but the issues that inevitably come up can be addressed midcourse, typically at much lower cost.

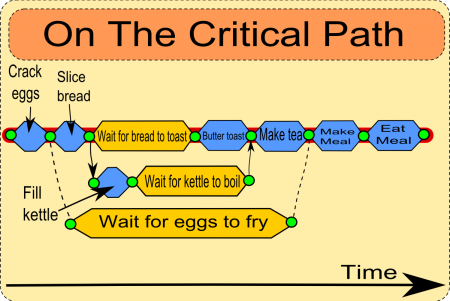

Another subject I studied, and actually taught a bit in grad school, was scheduling. For any project, the critical path method (CPM) is an important approach to embrace. The basic premise is that each activity may depend on other activities being completed first, and that activities off the critical path can ‘float’, meaning there is leeway in when they get done without delaying the project’s delivery. The critical path is the longest chain of dependent activities: its length sets the shortest time in which the whole project can be completed, and any delay to an activity on it delays the entire project. Mapping a machine learning project this way highlights bottlenecks like hand labeling training data so they can be rectified.
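To make the critical path concrete, here is a minimal Python sketch of the two passes behind CPM: a forward pass for earliest finish times, a backward pass for latest finish times, and float as the difference between them. The activity names and durations are hypothetical, chosen only to show how a hand-labeling step can end up on the critical path.

```python
from collections import defaultdict

# Hypothetical labeling project: activity -> (duration in days, predecessors).
ACTIVITIES = {
    "collect_data": (5, []),
    "write_guidelines": (3, []),
    "label_batch_1": (10, ["collect_data", "write_guidelines"]),
    "build_pipeline": (4, ["collect_data"]),
    "train_baseline": (2, ["label_batch_1", "build_pipeline"]),
    "evaluate": (1, ["train_baseline"]),
}


def critical_path(activities):
    """Return (project duration, critical activities, float per activity)."""
    # Topological order via Kahn's algorithm: predecessors before successors.
    indegree = {a: len(preds) for a, (_, preds) in activities.items()}
    successors = defaultdict(list)
    for a, (_, preds) in activities.items():
        for p in preds:
            successors[p].append(a)
    ready = [a for a, d in indegree.items() if d == 0]
    order = []
    while ready:
        a = ready.pop()
        order.append(a)
        for s in successors[a]:
            indegree[s] -= 1
            if indegree[s] == 0:
                ready.append(s)
    if len(order) != len(activities):
        raise ValueError("dependency cycle detected")

    # Forward pass: an activity finishes earliest once all predecessors finish.
    earliest_finish = {}
    for a in order:
        duration, preds = activities[a]
        start = max((earliest_finish[p] for p in preds), default=0)
        earliest_finish[a] = start + duration
    project_duration = max(earliest_finish.values())

    # Backward pass: the latest an activity can finish without delaying successors.
    latest_finish = {}
    for a in reversed(order):
        latest_finish[a] = min(
            (latest_finish[s] - activities[s][0] for s in successors[a]),
            default=project_duration,
        )

    # Float (slack) is the leeway; zero float means the activity is critical.
    slack = {a: latest_finish[a] - earliest_finish[a] for a in activities}
    critical = [a for a in order if slack[a] == 0]
    return project_duration, critical, slack


duration, critical, slack = critical_path(ACTIVITIES)
print(duration)                 # 18
print(critical)                 # ['collect_data', 'label_batch_1', 'train_baseline', 'evaluate']
print(slack["build_pipeline"])  # 6
```

With these made-up durations, the labeling batch has zero float, so any slip in labeling slips the whole project, while building the pipeline has six days of leeway.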

When I got into software engineering and adopted Agile, I saw huge similarities in the fundamental principles: break the project into manageable pieces, check in regularly with stakeholders, hold retrospectives, and plan the next iteration, reprioritizing based on internal and external factors. In my experience, data science has been slow to adopt this approach, especially when it comes to human labeling efforts. It has become the norm to hand a large dataset to a data labeling team and wait until every example is labeled before even trying to train a model and look at the results.

It’s difficult to predict how many labeled examples will be enough to train a machine learning model properly, so don’t wait until all of the examples are labeled. At a minimum, check in weekly: pull the examples labeled so far into a training pipeline and gauge the results. Another trick that will expedite projects is to start from a small number of pre-labeled examples and bootstrap a classifier that synthetically labels the rest (yes, I’m talking about you, Jaxon). The goal of any labeling effort is to get high-confidence ‘gold’ labels. By gold, I mean pre-labeled examples that will serve as ground truth for model training, testing, and calibration.
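The bootstrapping idea can be illustrated with generic self-training (pseudo-labeling), a swapped-in textbook technique and not a description of how Jaxon works. This minimal Python sketch assumes numeric feature vectors and uses a toy nearest-centroid classifier; the function names, the 0.9 confidence threshold, and the round limit are illustrative assumptions. Confident predictions become synthetic labels, and anything below the threshold is left for human labelers.

```python
import math
from collections import defaultdict


def fit_centroids(points, labels):
    """Toy 'model': the mean feature vector of each class (nearest-centroid)."""
    by_label = defaultdict(list)
    for point, label in zip(points, labels):
        by_label[label].append(point)
    return {
        label: tuple(sum(coords) / len(pts) for coords in zip(*pts))
        for label, pts in by_label.items()
    }


def predict_with_confidence(centroids, point):
    """Softmax over negative distances: closer centroids get more probability."""
    scores = {label: -math.dist(point, c) for label, c in centroids.items()}
    top = max(scores.values())
    weights = {label: math.exp(s - top) for label, s in scores.items()}
    best = max(weights, key=weights.get)
    return best, weights[best] / sum(weights.values())


def self_train(seed_points, seed_labels, unlabeled, threshold=0.9, max_rounds=3):
    """Pseudo-label confident examples; return (synthetic labels, examples left for humans)."""
    points, labels = list(seed_points), list(seed_labels)
    remaining = list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(points, labels)  # retrain on seed + synthetic labels
        unsure = []
        for p in remaining:
            label, confidence = predict_with_confidence(centroids, p)
            if confidence >= threshold:
                points.append(p)
                labels.append(label)
            else:
                unsure.append(p)
        if len(unsure) == len(remaining):
            break  # no new confident predictions, so further rounds change nothing
        remaining = unsure
    n = len(seed_points)
    return list(zip(points[n:], labels[n:])), remaining


synthetic, for_humans = self_train(
    seed_points=[(0, 0), (10, 10)],
    seed_labels=["A", "B"],
    unlabeled=[(1, 0), (9, 10), (5, 5)],
)
print(synthetic)   # [((1, 0), 'A'), ((9, 10), 'B')]
print(for_humans)  # [(5, 5)]
```

The synthetic labels are returned separately from the seed set, so they can be reviewed or spot-checked before being promoted to gold; the ambiguous point halfway between the classes is routed to a human.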

Active learning is another great technique to weave into the process. With active learning, machine learning models help prioritize the order in which humans label examples, surfacing the examples most likely to fill a gap in the model’s coverage. It’s very common for a model to be skewed, while labeling efforts tend to be fairly random in which examples get labeled and in what order. Being able to plug these holes with models and/or heuristics is another advantage of a more streamlined approach to model training. As discussed in the post “Dazed and Confused” by Jaxon’s CTO, consider training specialist models, perhaps binary classifiers, that address just the most problematic boundaries between confusable classes. These could be anything from advanced deep learning models to simple hand-coded heuristics: whatever helps discern that specific boundary.
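Here is a minimal Python sketch of both ideas under assumed inputs: a model that outputs a probability per class for each unlabeled example, and a small gold set with the model’s predictions. Margin-based uncertainty sampling (one common active learning strategy among several) sends the examples with the smallest gap between the top two classes to humans first, and a simple confusion count points at the class pair where a specialist binary model might help. The class names, probabilities, and function names are hypothetical.

```python
from collections import Counter


def margin(probs):
    """Gap between the top two class probabilities; a small gap means the model is torn."""
    top_two = sorted(probs.values(), reverse=True)[:2]
    return top_two[0] - (top_two[1] if len(top_two) > 1 else 0.0)


def prioritize_for_labeling(predictions, batch_size):
    """Margin-based uncertainty sampling: least decisive examples go to humans first."""
    ranked = sorted(predictions, key=lambda item: margin(item[1]))
    return [example_id for example_id, _ in ranked[:batch_size]]


def most_confused_pairs(gold, predicted, top_k=1):
    """Count errors per class pair, treating (a, b) and (b, a) as the same boundary."""
    confusions = Counter(
        tuple(sorted((g, p))) for g, p in zip(gold, predicted) if g != p
    )
    return confusions.most_common(top_k)


# Hypothetical model outputs for unlabeled examples: (id, class probabilities).
predictions = [
    ("ex1", {"billing": 0.90, "refund": 0.05, "shipping": 0.05}),
    ("ex2", {"billing": 0.48, "refund": 0.46, "shipping": 0.06}),
    ("ex3", {"billing": 0.20, "refund": 0.30, "shipping": 0.50}),
    ("ex4", {"billing": 0.40, "refund": 0.35, "shipping": 0.25}),
]
print(prioritize_for_labeling(predictions, batch_size=2))  # ['ex2', 'ex4']

# Hypothetical gold labels vs. model predictions on a small held-out set.
gold = ["billing", "refund", "refund", "shipping", "billing", "refund"]
pred = ["refund", "billing", "refund", "shipping", "refund", "shipping"]
print(most_confused_pairs(gold, pred))  # [(('billing', 'refund'), 3)]
```

In this toy data, the billing/refund boundary accounts for most errors, making it the natural candidate for a specialist binary classifier or a targeted heuristic.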

Embrace the concepts of lean thinking, the critical path method, and Agile methodologies. Break labeling into shorter cycles and measure the impact of each one. Additional human labels will hit diminishing returns at some point, and working this way lets you find that point much sooner. Try machine learning amplification technologies like Jaxon.AI and get better results, much faster, with fewer resources. Get on the fast track to gold!
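The diminishing-returns check can be automated at each check-in. This minimal Python sketch assumes you record the cumulative label count and a validation score (accuracy, F1, or similar) every cycle; the function name, the threshold of 0.005 score points per 1,000 labels, and the two-check-in patience are arbitrary illustrative choices, and the scores below are made up.

```python
def diminishing_returns_point(label_counts, scores, min_gain_per_1k=0.005, patience=2):
    """Return the label count after which gains stayed below the threshold for
    `patience` consecutive check-ins, or None if that hasn't happened yet."""
    slow_streak = 0
    for i in range(1, len(scores)):
        added = label_counts[i] - label_counts[i - 1]
        if added <= 0:
            raise ValueError("label counts must increase between check-ins")
        gain_per_1k = (scores[i] - scores[i - 1]) / added * 1000
        if gain_per_1k < min_gain_per_1k:
            slow_streak += 1
            if slow_streak >= patience:
                return label_counts[i - patience]  # count where the slow streak began
        else:
            slow_streak = 0
    return None


# Made-up weekly check-ins: cumulative labels and validation score at each one.
counts = [1000, 2000, 3000, 4000, 5000, 6000]
scores = [0.70, 0.78, 0.82, 0.83, 0.832, 0.833]
print(diminishing_returns_point(counts, scores))  # 4000
```

Requiring a streak of slow check-ins, rather than a single one, keeps one noisy evaluation from ending a labeling effort prematurely.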

– Scott Cohen, CEO