Bringing AI from toy problems to practical applications is a highly iterative process. Or, as we say in the business: trial and error. Throwing, er, stuff, at the wall, over and over again, hopefully each time a bit closer to the target. It’s not just you, that’s the nature of the beast. Data is ugly, problems are ambiguous, and best approaches are often unclear—since you’re not working on toy problems, the road isn’t yet paved (or marked).

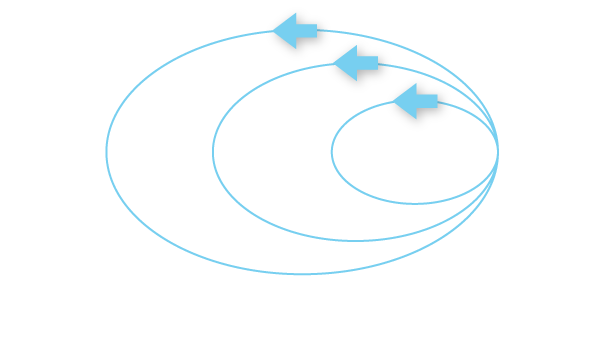

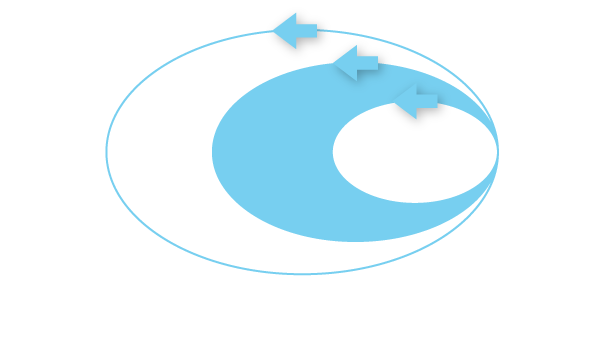

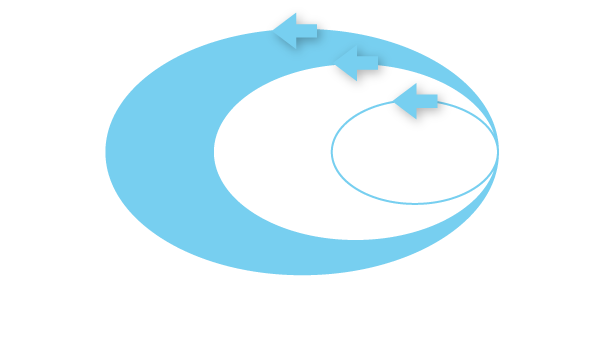

Typically, things go like this:

- Frame the problem you’re trying to solve

- Find some data to support that problem

- Train a model with the data

- Ship it off for deployment. Done.

Ha!

Of course you’re not done. But you knew this wouldn’t end here: the word “iteration” was in the first sentence of this post, after all, and the word “loop” is in the title! So, what exactly to iterate on? We’ve named three things so far: the model, the data, and the problem (aka “task”).

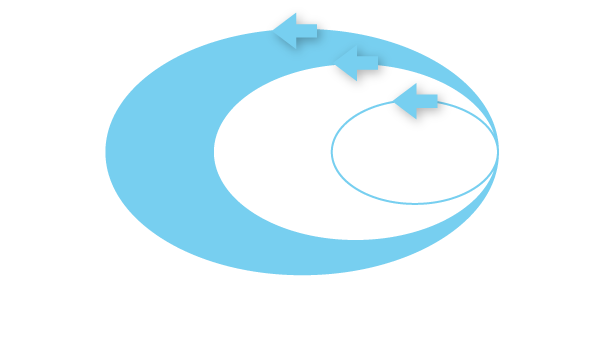

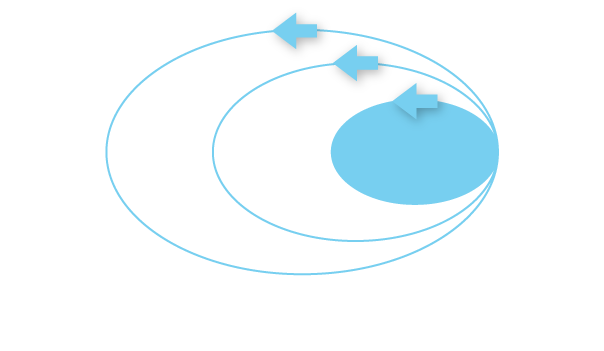

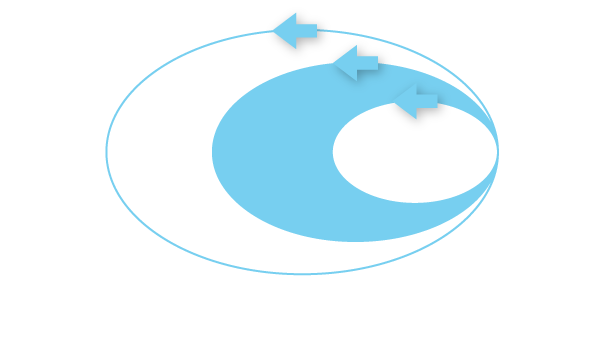

The Data-Centric AI movement makes a strong case for focusing on the data over the model, particularly given the impressive strength of modern deep learning architectures and pre-trained weights. Models are so good that any reasonable choice of architecture and parameterization is likely to do a good job learning the data, and effort to improve models directly is likely to be met with swiftly diminishing returns.

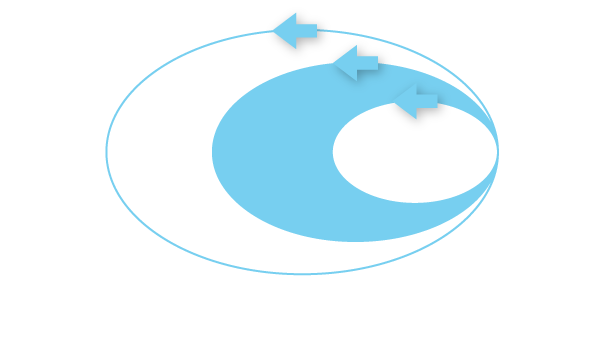

Agreed?! However, this doesn’t go far enough: defining the problem dictates the data and the definition of success. Reframing a problem can yield tremendous benefits. You may not even have to solve that thorny corner case, if it can be avoided. The objective is to solve a business problem, which can usually be framed in a number of ways, not to score well on a rigidly defined toy problem.

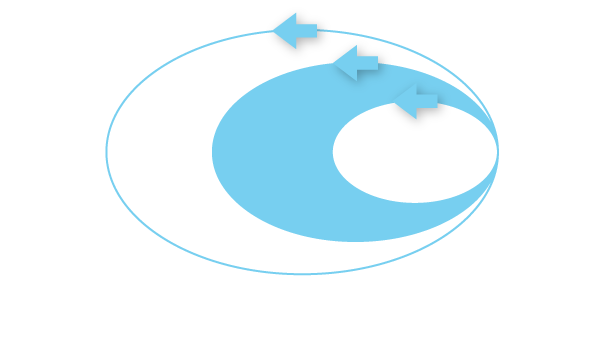

Time to tell a tale to illustrate the process. Since this article has disparaged toy problems, let’s pick a toy problem. Sentiment analysis. Reviews (movies, restaurants, whatever you like!) are always a crowd favorite. Binary classifier. Positive or negative.

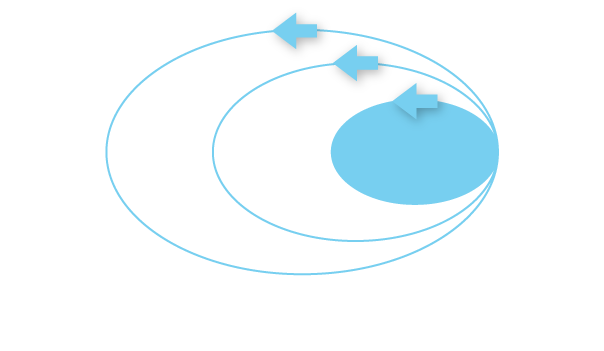

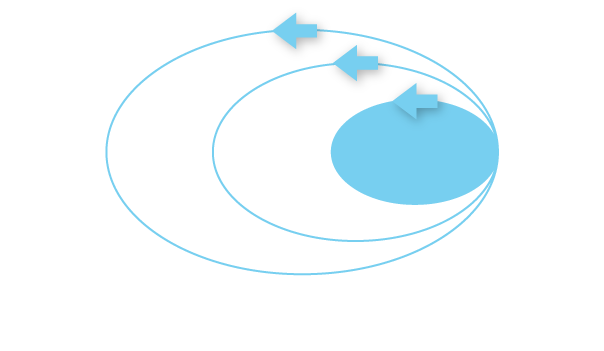

Oh no! The model isn’t good enough. Now what? Back to the data!

But why were there bad labels in the first place? The labelers are conscientious and hardworking. Maybe the labeler was you! One possibility is that some reviews are ambiguous; there may be no particular sentiment to be found. “That movie lasted 90 minutes, and I ate popcorn” doesn’t really say much about how the viewer felt.

Uh-oh! We don’t have any neutral labels yet.

But what about those original POS and NEG labels? Some of them probably should have been NEU.
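To make that relabeling pass a bit more concrete, here is a minimal Python sketch. Everything in it is hypothetical and only for illustration: the cue-word lists, the `Review` class, and the `neutral_candidates` helper are assumptions, not part of any real pipeline. It flags reviews that contain no obvious sentiment words, so a human can check whether the original POS or NEG label should really be NEU.

```python
from dataclasses import dataclass

# Hypothetical, hand-picked cue words (an assumption for this sketch).
# A real project would more likely use model confidence or labeler disagreement.
POSITIVE_CUES = {"loved", "great", "wonderful", "delicious", "fun"}
NEGATIVE_CUES = {"hated", "awful", "boring", "terrible", "bland"}


@dataclass
class Review:
    text: str
    label: str  # "POS" or "NEG" under the original binary framing


def tokenize(text):
    """Lowercase the text and strip surrounding punctuation from each word."""
    return [w.strip(".,!?;:\"'()").lower() for w in text.split()]


def sentiment_cue_count(text):
    """Count how many tokens appear in either cue-word list."""
    return sum(t in POSITIVE_CUES or t in NEGATIVE_CUES for t in tokenize(text))


def neutral_candidates(reviews):
    """Return reviews with no cue words: candidates for a human to re-check as NEU."""
    return [r for r in reviews if sentiment_cue_count(r.text) == 0]


reviews = [
    Review("I loved every minute, great cast!", "POS"),
    Review("Boring plot and terrible pacing.", "NEG"),
    Review("That movie lasted 90 minutes, and I ate popcorn.", "POS"),
]

for r in neutral_candidates(reviews):
    print(f"re-check ({r.label} -> NEU?): {r.text}")
```

The sketch only proposes candidates; a person still makes the call. That keeps the reframing (two classes to three) and the data fix (relabeling) in the same loop.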

And on the story goes, until victory is declared and the model is deployed. (OK, fine, then you’ll be iterating on the model, but that’s tomorrow’s problem.)

So what’s the moral of the story? Iterate a lot. On the data, and on framing the actual problem. Not just the model.

— Greg Harman, CTO