In 2022, Air Canada’s customer service chatbot promised a passenger a discount that did not exist under the airline’s actual policy, and a tribunal later ruled that Air Canada had to honor it. In 2023, a California auto dealer’s AI chatbot was talked into “agreeing” to sell a brand new Chevy Tahoe for $1. Earlier that year, a New York lawyer was sanctioned for citing legal precedents that an LLM had fabricated.

Hallucinations hamper the effective use of generative AI and, for regulated and high-risk applications, can make it outright untenable.

This isn’t simply a matter of waiting for bigger, better LLMs to arrive on the market. LLMs have a fundamental limitation: they generate tokens, not concepts. Each token has some non-zero chance of veering away from correct and sensible answers, often while still sounding plausible. Once the LLM is off the right path, every subsequent token is conditioned on that mistake, so further errors can follow and compound.
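To see why even a small per-token error rate matters, consider a deliberately simplified model in which every token independently goes wrong with probability p. An n-token answer is then entirely error-free with probability (1 - p)^n, which decays exponentially with length. The 0.1% rate below is a hypothetical figure chosen for illustration, not a measurement, and real token errors are not independent.

```python
def p_error_free(per_token_error: float, n_tokens: int) -> float:
    """Probability that all n tokens are correct, assuming (as a simplification)
    that each token independently goes wrong with probability `per_token_error`."""
    return (1.0 - per_token_error) ** n_tokens


# Hypothetical 0.1% per-token error rate, for illustration only.
for n in (100, 1_000, 10_000):
    print(f"{n:>6} tokens: P(no errors) = {p_error_free(0.001, n):.4f}")
```

Under these assumptions, a 100-token answer is error-free roughly 90% of the time, a 1,000-token answer only about 37% of the time, and a 10,000-token answer almost never.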

To realize the benefits that these technologies can bring, we need to bridge the gap from probabilistic token generation to conceptual reasoning.

Jaxon has developed a set of intelligent guardrails through R&D efforts with the U.S. Department of Defense.

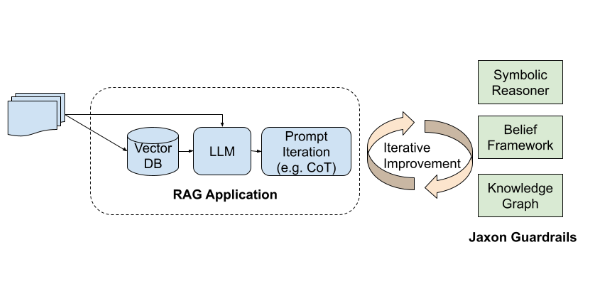

- These guardrails augment “standard-issue” Retrieval-Augmented Generation (RAG)+LLM stacks to provide validation at the conceptual level.

- They function at different levels of assurance, from low-level fact verification to full-rigor formal methods, balancing the required degree of confidence with practical implementation and operational costs.

- Human judgment can be incorporated at a supervisory level, trading off labor costs against improved outcomes.
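A minimal sketch of how graded assurance and human supervision might fit together, assuming each automated check reports a pass/fail verdict plus a confidence score. Everything here is hypothetical: `Verdict`, `run_guardrails`, the `min_confidence` threshold, and the toy `price_floor_check` (inspired by the $1 Tahoe incident). Raising `min_confidence` demands more assurance, so more outputs are routed to a human reviewer instead of being accepted automatically.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Verdict:
    check: str         # name of the check that produced this verdict
    passed: bool       # did the output satisfy the check?
    confidence: float  # 0.0-1.0: how sure the check is about `passed`


Check = Callable[[str], Verdict]


def price_floor_check(text: str, floor: float = 1000.0) -> Verdict:
    """Hypothetical low-level check: every quoted dollar price must meet a floor."""
    # Matches "$1", "$58,000", "$19.99"; commas are stripped before parsing.
    prices = [float(m.replace(",", "")) for m in re.findall(r"\$(\d[\d,]*(?:\.\d+)?)", text)]
    if not prices:
        # Nothing to verify, so the check passes but cannot vouch for the text.
        return Verdict("price_floor", True, 0.5)
    return Verdict("price_floor", all(p >= floor for p in prices), 0.99)


def run_guardrails(text: str, checks: List[Check], min_confidence: float) -> str:
    """Return 'accept', 'reject', or 'human_review' for one LLM output."""
    verdicts = [check(text) for check in checks]
    # Any confident failure is enough to block the output automatically.
    if any(not v.passed and v.confidence >= min_confidence for v in verdicts):
        return "reject"
    # Accept automatically only if every check passed with enough confidence.
    if all(v.passed and v.confidence >= min_confidence for v in verdicts):
        return "accept"
    # Otherwise the automated checks are inconclusive: escalate to a human.
    return "human_review"


checks = [price_floor_check]
print(run_guardrails("Deal! The Tahoe is yours for $1.", checks, 0.9))   # reject
print(run_guardrails("The Tahoe is listed at $58,000.", checks, 0.9))    # accept
print(run_guardrails("Happy to help with your purchase!", checks, 0.9))  # human_review
```

With `min_confidence` lowered to 0.4, the third message would be accepted automatically: less human review, but also less assurance.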

One of the key elements in combining these guardrails with LLM applications is the interface between the guardrail and the LLM. Jaxon bridges this gap by taking advantage of LLMs’ ability to generate not just English prose, but also data structures and synthetic structured languages such as domain-specific languages (DSLs). Because the communication is machine-readable, the guardrails can evaluate LLM outputs without reintroducing LLM error. Results can likewise be passed back to the LLM, forming a powerful feedback loop for self-improvement.
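As a minimal sketch of such an interface, assume the LLM has been prompted to answer with JSON claims expressed as (subject, predicate, object) triples. A guardrail can then parse the output deterministically, check each claim against a knowledge graph, and return a machine-readable report for the next LLM turn. The JSON schema, `KNOWLEDGE_GRAPH`, `validate_claims`, and the WidgetCo facts are all hypothetical, and a real graph would need entity normalization rather than exact string matching.

```python
import json
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Hypothetical toy knowledge graph standing in for a real, curated one.
KNOWLEDGE_GRAPH: Set[Triple] = {
    ("WidgetCo", "refund_window_days", "30"),
    ("WidgetCo", "ships_to", "Canada"),
}


def validate_claims(raw_llm_output: str, kg: Set[Triple]) -> Dict:
    """Check structured LLM output against a knowledge graph.

    Expects JSON like {"claims": [{"subject": ..., "predicate": ..., "object": ...}]}
    and returns a machine-readable report that can be sent back to the LLM.
    """
    try:
        data = json.loads(raw_llm_output)
    except json.JSONDecodeError as exc:
        return {"valid": False, "errors": [f"malformed JSON: {exc.msg}"], "unsupported": []}

    claims = data.get("claims") if isinstance(data, dict) else None
    if not isinstance(claims, list):
        return {"valid": False, "errors": ["expected an object with a 'claims' list"], "unsupported": []}

    fields = ("subject", "predicate", "object")
    errors: List[str] = []
    unsupported: List[Dict[str, str]] = []
    for i, claim in enumerate(claims):
        if not isinstance(claim, dict) or not all(isinstance(claim.get(f), str) for f in fields):
            errors.append(f"claim {i}: needs string 'subject', 'predicate', and 'object'")
            continue
        # Exact-match lookup: a claim is supported only if the triple is in the graph.
        if (claim["subject"], claim["predicate"], claim["object"]) not in kg:
            unsupported.append(claim)

    return {"valid": not errors and not unsupported, "errors": errors, "unsupported": unsupported}


# Simulated LLM response: one supported claim and one invented policy.
llm_output = json.dumps({"claims": [
    {"subject": "WidgetCo", "predicate": "refund_window_days", "object": "30"},
    {"subject": "WidgetCo", "predicate": "offers_discount", "object": "bereavement"},
]})
report = validate_claims(llm_output, KNOWLEDGE_GRAPH)
feedback = json.dumps(report)  # e.g. appended to the next prompt so the LLM can self-correct
print(feedback)
```

Because both the claims and the report are plain JSON, the check itself involves no LLM call, and the LLM only sees a precise list of what failed.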

Choosing Guardrails

| RAG | KNOWLEDGE GRAPH | BELIEF NETWORK | SYMBOLIC REASONING |
|---|---|---|---|
| Reduces, but does not eliminate, hallucinations | Rich, interconnected data representations | Bayesian inference provides a rigorous method for updating beliefs or hypotheses based on new evidence | Heavy burden of proof |
| Only adds context to an LLM via external data | Static or slowly evolving knowledge | Builds up degrees of belief by inference from evidence, even when information is uncertain or missing | Dynamic knowledge |
| Low entry cost | Strong choice for visualization and discovery | Bayesian learning is most similar to human reasoning | Formal reasoning is required |
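To illustrate the belief-network column, here is a minimal sketch of Bayesian updating for a single hypothesis H, such as “this LLM claim is correct.” It assumes the simplest possible network, in which each piece of evidence is conditionally independent given H (naive Bayes); a full belief network would also model dependencies between evidence nodes. The function name and likelihood values are hypothetical. Evidence that was never observed is simply left out of the update, which is one way the approach copes with missing information.

```python
from typing import Iterable, Tuple


def update_belief(prior: float, evidence: Iterable[Tuple[float, float]]) -> float:
    """Return P(H | evidence) by applying Bayes' rule once per observation.

    Each evidence item is a pair (P(e | H), P(e | not H)). Items are assumed
    conditionally independent given H, i.e. a naive-Bayes network.
    """
    if not 0.0 <= prior <= 1.0:
        raise ValueError("prior must be a probability")
    p_h, p_not_h = prior, 1.0 - prior
    for like_h, like_not_h in evidence:
        # Unnormalized posterior: prior belief times how well each hypothesis explains e.
        p_h, p_not_h = p_h * like_h, p_not_h * like_not_h
        total = p_h + p_not_h
        if total == 0.0:
            raise ValueError("evidence is impossible under both hypotheses")
        p_h, p_not_h = p_h / total, p_not_h / total  # renormalize so they sum to 1
    return p_h


# Hypothetical likelihoods for two independent checks on one LLM claim.
evidence = [
    (0.8, 0.3),  # a retrieved document supports the claim
    (0.9, 0.1),  # the knowledge graph contains a matching triple
]
print(round(update_belief(0.5, evidence), 3))  # 0.96
```

Starting from an even 50/50 prior, the posterior odds are the prior odds (1) times the likelihood ratios 0.8/0.3 and 0.9/0.1, or 24 to 1, which is a belief of 0.96.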

Jaxon’s guardrails offer several key contributions as improvements on “vanilla” RAG+LLM systems:

- They combine the creativity of generative AI with the rigor and traceability of symbolic reasoning systems.

- Transparent processing lets an oracle (possibly a human) supply ground truth with less human effort and engagement.

- Technology choices can be adjusted to the level of assurance required for a specific application, finding the optimal tradeoff between trustworthy outputs and system complexity.
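The traceability of symbolic reasoning comes from being able to justify every conclusion step by step. The sketch below is a minimal propositional forward-chaining engine over Horn-clause rules that records which premises produced each derived fact, so any conclusion can be explained as a proof tree. The rule and fact names are hypothetical, and production reasoners (Datalog engines, theorem provers) are far more expressive.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (premises, conclusion): a propositional Horn clause


def forward_chain(facts: Set[str], rules: List[Rule]) -> Dict[str, List[str]]:
    """Derive every fact the rules entail, recording why each one holds.

    Maps each known fact to the premises that produced it; an empty list
    means the fact was given rather than derived.
    """
    proof: Dict[str, List[str]] = {fact: [] for fact in facts}
    changed = True
    while changed:  # repeat until no rule adds anything new (a fixed point)
        changed = False
        for premises, conclusion in rules:
            if conclusion not in proof and premises.issubset(proof):
                proof[conclusion] = sorted(premises)
                changed = True
    return proof


def explain(fact: str, proof: Dict[str, List[str]], depth: int = 0) -> List[str]:
    """Render the derivation of `fact` as an indented proof tree."""
    label = fact if proof[fact] else f"{fact} (given)"
    lines = ["  " * depth + label]
    for premise in proof[fact]:
        lines.extend(explain(premise, proof, depth + 1))
    return lines


# Hypothetical rules a dealership guardrail might encode.
rules: List[Rule] = [
    (frozenset({"price_below_cost"}), "offer_unprofitable"),
    (frozenset({"offer_unprofitable", "offer_from_chatbot"}), "block_offer"),
]
proof = forward_chain({"price_below_cost", "offer_from_chatbot"}, rules)
print("\n".join(explain("block_offer", proof)))
```

Each derived fact points only to premises that were already established, so the explanation always bottoms out in given facts and can be audited by a human.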

Want to learn more? Contact us!