After you use Jaxon to label your training set and are ready to embark on training classifiers, it might seem that you’re in the home stretch. However, if you want to obtain a high-performing classifier, the journey continues. The data you use for training classifiers is vital, but it turns out that how you use it for training classifiers matters too.

Children learn in a pretty predictable way, at least most of the time. For example, first they will learn to count, then to add, then to do algebra, then calculus, and so on. And unless they have an eidetic memory, they will likely need to see each of these concepts several times in order to properly learn them. If a child hasn’t learned one of the concepts, they can’t learn the more advanced topics that rely on the foundation. Neural networks learn in a similar way—the order and structure of information, as well as repetition, affects their overall accuracy as a classifier.

Jaxon allows for both transfer learning and training schedules for the neural network. For a crash course, let’s start with curriculum training and work our way back to transfer learning.

Once you have labeled data, you can’t just run it through your classifier once and walk away. Neural networks benefit greatly from seeing information many times—just like humans. The order in which the examples are presented can also have an effect. Add multiple training sets to the mix (if you’re lucky enough to have them!), and it can get complicated very quickly.

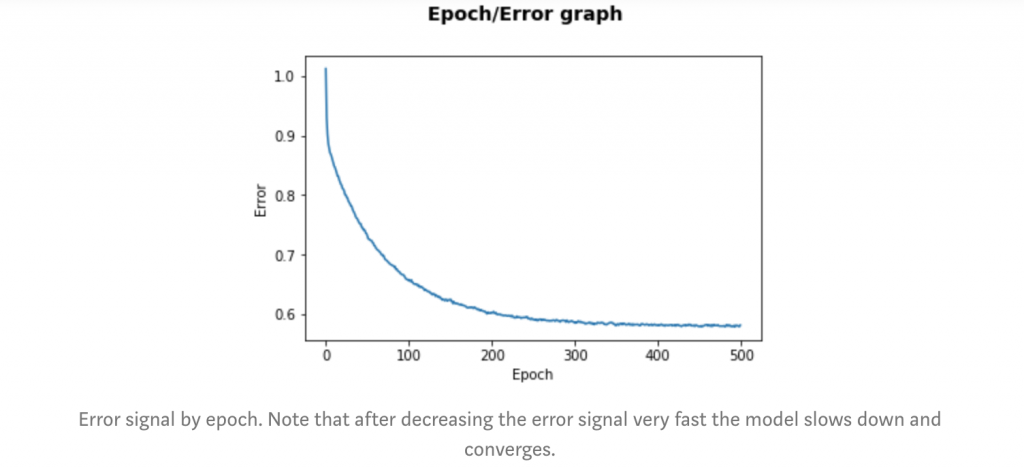

Training the neural net for several epochs allows it to run through the data once, check to see how well it did, refine the parameters, and try again. As the neural net sees the data several times, the parameters will become more and more optimized to that particular dataset. Typically, the F1 score increases over several epochs until the neural net has reached peak performance, but exactly how many are needed depends on the data and neural net used.

Jaxon also provides other training options to increase classifier accuracy. For example, you could train on data sets augmented with synthetic labels. You could also use techniques such as freezing neural net layers for several epochs. This allows task-specific layers to begin learning the dataset before allowing the pretrained foundation to drift. Each of these can greatly increase classifier accuracy. And if you’re concerned about investing the time and energy to manually figure out what will be best for each training set, Jaxon makes these processes easy. Just pick from a list or click a checkbox, and Jaxon can work its magic. Jaxon makes it easy to try these techniques on several different neural architectures.

Transfer learning primes the neural network for the language and patterns it will see. This also allows it to begin setting its parameters for the particular dataset before it even sees any labels. It’s not just a useful boost for when the labeled sets are small; you can see benefits even when there are huge amounts of labeled data. The early examples that the neural net sees have a big influence on the outcome of training. Pretrained neural networks actually learn different features altogether from the labeled training sets than non-pretrained networks.

By making transfer learning so easy to implement, Jaxon amplifies the expertise of data scientists and analysts, enabling them to supervise and focus on the jobs that AI is not (yet!) capable of. By fine-tuning on domain-related datasets as well as on the training data itself, you can develop a very specialized vocabulary that enhances the learning speed and ultimate performance ceiling of a neural model. In the end, Jaxon optimizes model accuracy with great speed and efficiency, allowing classifiers to be used in production much quicker than has ever been possible before.

– Charlotte Ruth, Director of Linguistics