Jaxon's Domain-Specific AI Language

Trust Generative AI for Critical Applications

Knowledge bases tailored to your data and use cases

Guardrails for new or existing LLM pipelines

Formal verification ensures trusted output

AI guardrails impose user-defined constraints, ensuring AI outputs remain accurate & relevant.

AI Has a Hallucination Problem

AI can generate information that sounds plausible but is incorrect. Large language models (LLMs) produce text by following statistical patterns, not by verifying facts, so they need guardrails. Enter Jaxon’s DSAIL.

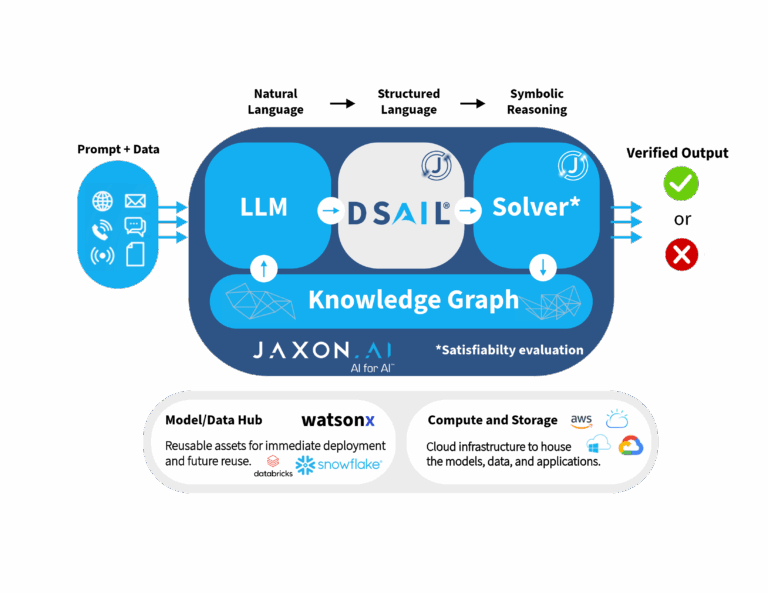

AI Verification Agent

Jaxon’s patent-pending Domain-Specific AI Language (DSAIL) platform offers a set of interchangeable modules that provide verification guardrails for generative AI. DSAIL uses ‘formal methods’ to mathematically prove the accuracy of LLM output.

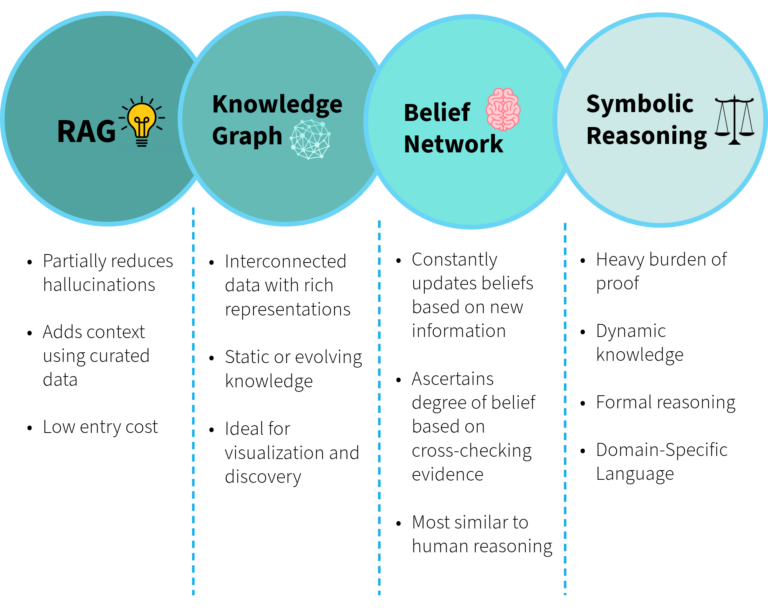

Choosing Guardrails

Jaxon lets users select the ‘LLM guardrail’ that best fits their organizational needs, with each option positioned by its degree of formality and its degree of trustworthiness.

Fact Checker

DSAIL’s ‘Fact Checker’ is at the heart of addressing the hallucination problem. Jaxon’s proprietary DSAIL technology turns natural language into binary that gets run through a gauntlet of checks and balances. This ensures the AI’s response meets all constraints and assertions before being returned – formal verification of its output.

Verification & Validation

Verification confirms that a model is built correctly and meets its specification, while validation confirms that it fulfills its intended use in real-world conditions. The DSAIL platform provides a set of guardrails to make sure AI systems stay on track and produce accurate responses.

Jaxon’s DSAIL: Tackling AI Hallucinations

Policy Rules Guardrails are a neurosymbolic reasoning layer that translates policy documents into executable logic. They run formal checks against AI outputs to determine compliance with rules, laws, or mission parameters, delivering precise, rule-based validation for high-assurance domains.
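To make "policy documents as executable logic" concrete, here is a minimal Python sketch. It is not DSAIL's implementation or API. The `PolicyRule` class, the `evaluate` function, the two lending rules, and the fact fields are all hypothetical, and the sketch assumes the relevant facts have already been extracted from the AI output into a dictionary.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PolicyRule:
    """One policy clause expressed as a machine-checkable predicate (hypothetical)."""
    rule_id: str
    description: str
    check: Callable[[dict], bool]  # returns True when the facts comply


# Hypothetical rules, standing in for clauses taken from a policy document.
RULES = [
    PolicyRule(
        "R1",
        "Loan amount must not exceed 5x annual income",
        lambda f: f["loan_amount"] <= 5 * f["annual_income"],
    ),
    PolicyRule(
        "R2",
        "Applicant must be at least 18 years old",
        lambda f: f["applicant_age"] >= 18,
    ),
]


def evaluate(facts: dict, rules: list = RULES) -> list:
    """Return the IDs of every rule the given facts violate."""
    return [rule.rule_id for rule in rules if not rule.check(facts)]


# Facts assumed to have been extracted from an AI-generated recommendation.
facts = {"loan_amount": 300_000, "annual_income": 50_000, "applicant_age": 34}
violations = evaluate(facts)
print(violations or "compliant")
```

Keeping each rule as a named predicate means a failed check can point back to the specific clause that was violated, rather than producing a bare pass or fail.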

Agentic Guardrails are a family of LLM-powered workflows for automated claim verification. From consistency checks to critique-and-revise loops, they use structured prompting to evaluate, cross-examine, or decompose outputs, all without needing external systems.
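A critique-and-revise loop of the kind mentioned above can be sketched in a few lines of Python. This is an illustrative outline under stated assumptions, not Jaxon's workflow: `critique_and_revise`, `stub_critique`, and `stub_revise` are hypothetical names, and the two stubs stand in for what would be LLM calls driven by structured prompts.

```python
from typing import Callable, Optional, Tuple


def critique_and_revise(
    draft: str,
    critique: Callable[[str], Optional[str]],
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> Tuple[str, bool]:
    """Alternate critique and revision until the critic has no objection.

    Returns the final text and a flag saying whether the critic accepted it.
    """
    for _ in range(max_rounds):
        objection = critique(draft)
        if objection is None:
            return draft, True
        draft = revise(draft, objection)
    # Out of rounds: report whether the last revision passes the critic.
    return draft, critique(draft) is None


# Stubs standing in for LLM calls (assumptions for illustration only).
def stub_critique(text: str) -> Optional[str]:
    if "guaranteed" in text:
        return "Remove the unsupported word 'guaranteed'."
    return None


def stub_revise(text: str, objection: str) -> str:
    return text.replace("guaranteed ", "")


final, accepted = critique_and_revise(
    "This fund offers guaranteed returns.", stub_critique, stub_revise
)
print(final, accepted)
```

The round limit matters in practice: without it, a critic and reviser that never agree would loop forever, so the sketch returns the last draft together with an explicit accepted or rejected flag.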