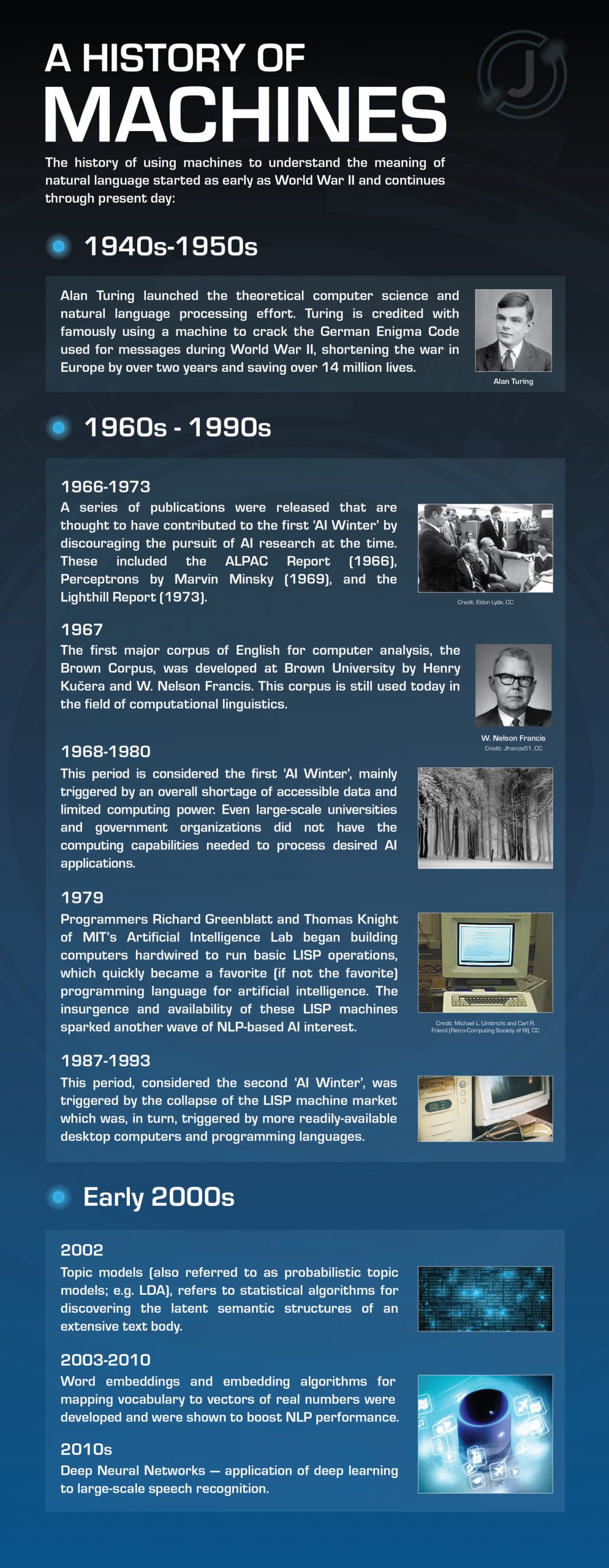

A History of Machines

The history of using machines to understand the meaning of natural language began as early as WWII and continues to the present day.

1940s-1950s

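- Alan Turing helped found theoretical computer science. His 1950 paper ‘Computing Machinery and Intelligence’ proposed a language-based test of machine intelligence (the ‘imitation game’), an early spark for natural language processing. During WWII, Turing is credited with designing the Bombe, an electromechanical machine that helped break the German Enigma cipher used for military messages. By some historians' estimates, this codebreaking work shortened the war in Europe by over two years and saved over 14 million lives.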

1960s-1990s

- 1966-1973: Several publications from this period are thought to have contributed to the first ‘AI Winter’ by discouraging AI research at the time. These included the ALPAC Report (1966), ‘Perceptrons’ by Marvin Minsky and Seymour Papert (1969), and the Lighthill Report (1973).

- 1967: The first major corpus of English for computer analysis, the Brown Corpus, was developed at Brown University by Henry Kučera and W. Nelson Francis. This corpus is still used today in the field of computational linguistics.

- 1974-1980: This period is considered the first ‘AI Winter’, triggered mainly by an overall shortage of accessible data and limited computing power. Even large universities and government organizations lacked the computing power needed to run the AI applications researchers wanted to build.

- 1979: Programmers Richard Greenblatt and Thomas Knight of MIT’s Artificial Intelligence Lab began building computers hardwired to run basic operations of LISP, a favorite (if not the favorite) programming language for artificial intelligence. The rise and availability of these LISP machines sparked another wave of interest in NLP-based AI.

- 1987-1993: This period, considered the ‘Second AI Winter’, was triggered by the collapse of the LISP machine market, which in turn was undercut by increasingly capable and readily available desktop computers and programming languages.

2000s-2010s

- 2002: ‘Topic models’ (also called probabilistic topic models, e.g. latent Dirichlet allocation, or LDA) emerged as statistical algorithms for discovering the latent semantic structure of a large body of text.

- 2003-2010: Word embeddings, which map each word in a vocabulary to a vector of real numbers, were developed along with algorithms for learning them, and were shown to improve performance on NLP tasks.

- 2010s: Deep neural networks were applied to large-scale speech recognition, marking the rise of deep learning in language processing.