The F1 score is a metric used to evaluate the performance of a classification model. It balances two key aspects:

- Precision: the proportion of true positive predictions out of all positive predictions made.

- Recall: the proportion of true positive predictions out of all actual positive cases.
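
Written in terms of the counts of true positives (TP), false positives (FP), and false negatives (FN), the two definitions above become:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$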

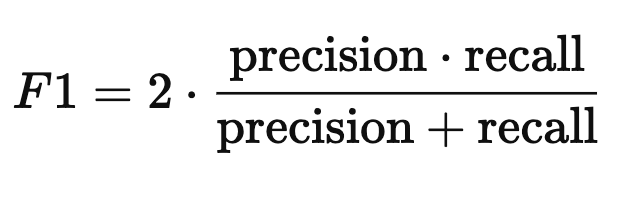

The F1 score is the harmonic mean of precision and recall:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
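
As a quick illustration with made-up values, take a model with precision 0.9 and recall 0.1. The harmonic mean is pulled toward the smaller of the two values, so F1 ends up far below the arithmetic mean:

$$F_1 = \frac{2 \cdot 0.9 \cdot 0.1}{0.9 + 0.1} = \frac{0.18}{1.0} = 0.18, \qquad \text{whereas} \qquad \frac{0.9 + 0.1}{2} = 0.5$$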

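It’s especially useful when both false positives and false negatives matter, and when classes are imbalanced. In that setting accuracy can be misleading: a model that always predicts the majority class can reach high accuracy while its F1 score for the minority class is zero.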
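
A minimal Python sketch of the imbalanced-class point, assuming binary labels where `1` is the positive class and treating any ratio with a zero denominator as 0. The helpers `confusion_counts`, `f1_score` and `accuracy` are hypothetical names written for illustration, not a library API, and the toy labels are invented:

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int]:
    """Return (TP, FP, FN) for the positive class, assumed to be labeled 1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, fn


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Harmonic mean of precision and recall; undefined ratios are treated as 0 (an assumption)."""
    tp, fp, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    # Algebraically equal to 2*TP / (2*TP + FP + FN).
    return 2 * precision * recall / (precision + recall)


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Imbalanced toy data: 95 negatives followed by 5 positives.
y_true = [0] * 95 + [1] * 5

# Model A always predicts the majority (negative) class.
always_negative = [0] * 100

# Model B raises 3 false alarms on negatives and finds 4 of the 5 positives.
finds_most = [1] * 3 + [0] * 92 + [1] * 4 + [0] * 1

for name, y_pred in [("always negative", always_negative), ("finds most", finds_most)]:
    print(f"{name}: accuracy={accuracy(y_true, y_pred):.2f}, F1={f1_score(y_true, y_pred):.2f}")
# always negative: accuracy=0.95, F1=0.00
# finds most: accuracy=0.96, F1=0.67
```

The two models differ by only one point of accuracy, while F1 separates them clearly because it ignores the many easy true negatives. For real projects, scikit-learn provides `sklearn.metrics.f1_score`, which also covers multi-class averaging.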